
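Supplementary examples

Getting data: a crawler program

Some websites (for example Baidu, Google, Renren, Alibaba, Meituan, ...) publish public API endpoints from which anyone can download data.

This example uses a crawler program to download a given city's deal data for the current day from Meituan's public API and save it as a txt file.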

    import urllib2  # Python 2 library for fetching web pages (urllib.request in Python 3)

    def fetch(url):
        http_header = {'User-Agent': 'Chrome'}
        # Build a Request object; it is passed to urlopen below
        http_request = urllib2.Request(url, None, http_header)
        print("Starting downloading data......")
        # urlopen returns a response object for the request
        http_response = urllib2.urlopen(http_request)
        print("Finished downloading data......")
        print(http_response.code)
        print(http_response.info())
        print("-------Data--------")
        # Save the response body to a text file
        with open('meituan.txt', 'w') as f:
            f.write(http_response.read())

    if __name__ == "__main__":
        # Meituan's public API endpoint
        fetch("http://www.meituan.com/api/v2/shanghai/deals")
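For readers on Python 3, where urllib2 no longer exists, the same request can be sketched with urllib.request. This is a minimal sketch rather than the original author's code: the helper names build_request, save_body, and fetch_to_file are hypothetical, and the network call is kept in its own function so the other pieces can be tried offline.

```python
import urllib.request


def build_request(url, user_agent="Chrome"):
    """Create a Request carrying the same User-Agent header as the example above."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def save_body(body, path):
    """Write the raw response bytes to a file."""
    with open(path, "wb") as f:
        f.write(body)


def fetch_to_file(url, path="meituan.txt"):
    """Download url and save the body; this step needs network access."""
    with urllib.request.urlopen(build_request(url)) as response:
        print(response.status)
        save_body(response.read(), path)
```

Calling fetch_to_file with the Meituan URL from the example would mirror the original fetch function, assuming the endpoint is still reachable.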

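First steps in data analysis: parsing an XML file

quotes_historical_yahoo is a function that fetches historical data from Yahoo. It takes a company's ticker symbol and the start and end dates of the query, and outputs a buffered file.

The example here counts the total number of deals in the city for the current day. The code is as follows: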

    # Standard-library XML parser; note that it is not safe against maliciously constructed input.
    import xml.etree.ElementTree as ET

    tree = ET.parse('meituan.txt')  # parse the file saved by the crawler
    meituan_deal_set = []
    root = tree.getroot()  # get the root node
    for data in root.iter("data"):
        deal = data.find("deal")
        meituan_deal = {}
        if deal is not None:
            try:
                meituan_deal["deal_id"] = deal.find("deal_id").text
            except Exception:
                print("No deal id")
            try:
                meituan_deal["sales"] = int(deal.find("sales_num").text)
            except Exception:
                print("invalid sales number")
            try:
                # assumes the price element is named "price"
                meituan_deal["price"] = float(deal.find("price").text)
            except Exception:
                print("invalid price")
            meituan_deal_set.append(meituan_deal)
    print(len(meituan_deal_set))

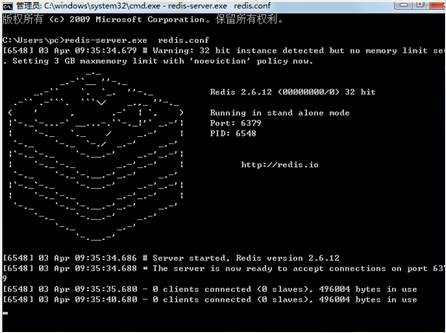

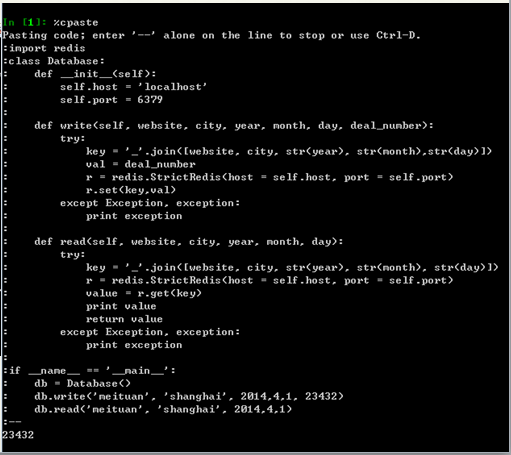
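To try the parsing logic without downloading anything, the same traversal can be run on a small inline document. The XML below is made up for illustration and only mirrors the element names the code above looks for (data, deal, deal_id, sales_num); the real API response may be structured differently. The helper name summarize_deals is also hypothetical.

```python
import xml.etree.ElementTree as ET

# Made-up sample shaped like the structure the parser above expects.
SAMPLE = """
<response>
  <deals>
    <data><deal><deal_id>1001</deal_id><sales_num>12</sales_num></deal></data>
    <data><deal><deal_id>1002</deal_id><sales_num>30</sales_num></deal></data>
    <data><deal><deal_id>1003</deal_id><sales_num>n/a</sales_num></deal></data>
  </deals>
</response>
"""


def summarize_deals(xml_text):
    """Return (number of deals, total sales), skipping unparsable sales numbers."""
    root = ET.fromstring(xml_text.strip())
    deal_count = 0
    total_sales = 0
    for data in root.iter("data"):
        deal = data.find("deal")
        if deal is None:
            continue
        deal_count += 1
        try:
            total_sales += int(deal.findtext("sales_num"))
        except (TypeError, ValueError):
            pass  # missing or non-numeric sales_num
    return deal_count, total_sales


print(summarize_deals(SAMPLE))
```

Catching only TypeError and ValueError, rather than every exception, keeps genuine bugs visible while still tolerating missing or malformed fields.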

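Storing and reading data

Once you have the data, you also need a database to go with it. The one introduced here is Redis, an open-source NoSQL database.

Before using Redis, a few things need to be installed (redis.zip).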

    import redis
    import datetime

    # Start the server first: redis-server.exe redis.conf

    class Database:
        def __init__(self):
            self.host = 'localhost'  # host name
            self.port = 6379         # default Redis port
            self.write_pool = {}
            self.read_pool = []

        def write(self, website, city, year, month, day, deal_number):
            # Write a single value to the database
            try:
                key = '_'.join([website, city, str(year), str(month), str(day)])
                val = deal_number
                r = redis.StrictRedis(host=self.host, port=self.port)
                r.set(key, val)
            except Exception as exception:
                print(exception)

        def add_write(self, website, city, year, month, day, deal_number):
            # Queue a value for the next batch write
            key = '_'.join([website, city, str(year), str(month), str(day)])
            val = deal_number
            self.write_pool[key] = val

        def batch_write(self):
            # Write all queued values in one call, which is more efficient
            try:
                r = redis.StrictRedis(host=self.host, port=self.port)
                r.mset(self.write_pool)
            except Exception as exception:
                print(exception)

        def read(self, website, city, year, month, day):
            # Read a single value
            try:
                key = '_'.join([website, city, str(year), str(month), str(day)])
                r = redis.StrictRedis(host=self.host, port=self.port)
                value = r.get(key)
                print(value)
                return value
            except Exception as exception:
                print(exception)

        def add_read(self, website, city, year, month, day):
            # Queue a key for the next batch read
            key = '_'.join([website, city, str(year), str(month), str(day)])
            self.read_pool.append(key)

        def batch_read(self):
            # Read all queued keys in one call
            try:
                r = redis.StrictRedis(host=self.host, port=self.port)
                val = r.mget(self.read_pool)
                return val
            except Exception as exception:
                print(exception)

    def single_write():
        beg = datetime.datetime.now()
        db = Database()
        for i in range(1, 10001):
            db.write('meituan', 'shanghai', i, 9, 1, i)
        end = datetime.datetime.now()
        print(end - beg)

    def batch_write():
        beg = datetime.datetime.now()
        db = Database()
        for i in range(1, 10001):
            db.add_write('meituan', 'shanghai', i, 9, 1, i)
        db.batch_write()
        end = datetime.datetime.now()
        print(end - beg)

    def single_read():
        beg = datetime.datetime.now()
        db = Database()
        for i in range(1, 10001):
            db.read('meituan', 'shanghai', i, 9, 1)
        end = datetime.datetime.now()
        print(end - beg)

    def batch_read():
        beg = datetime.datetime.now()
        db = Database()
        for i in range(1, 10001):
            db.add_read('meituan', 'shanghai', i, 9, 1)
        db.batch_read()
        end = datetime.datetime.now()
        print(end - beg)

    if __name__ == '__main__':
        #single_write()
        batch_write()
        #single_read()
        batch_read()
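Much of the gap between the single and batch variants comes from round trips to the server: each set or get is its own request, whereas mset and mget carry many keys in one. The sketch below illustrates this with a stand-in object instead of a real server, so it runs without Redis installed. CountingStore and make_key are hypothetical names, and the round-trip counter is a simplification of what actually happens on the wire.

```python
def make_key(website, city, year, month, day):
    """Build the same underscore-joined key as the Database class above."""
    return "_".join([website, city, str(year), str(month), str(day)])


class CountingStore:
    """In-memory stand-in for a Redis client that counts simulated round trips."""

    def __init__(self):
        self.data = {}
        self.round_trips = 0

    def set(self, key, value):
        self.round_trips += 1  # one request per key
        self.data[key] = value

    def mset(self, mapping):
        self.round_trips += 1  # one request carries every key
        self.data.update(mapping)

    def mget(self, keys):
        self.round_trips += 1
        return [self.data.get(k) for k in keys]


# Writing 100 values one at a time.
single = CountingStore()
for i in range(1, 101):
    single.set(make_key("meituan", "shanghai", i, 9, 1), i)

# Writing the same 100 values as one batch.
batch = CountingStore()
pool = {make_key("meituan", "shanghai", i, 9, 1): i for i in range(1, 101)}
batch.mset(pool)

print(single.round_trips, batch.round_trips)
```

Both stores end up holding the same data; only the number of simulated requests differs, which is the effect the timing functions above are meant to measure.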

The code is fairly long; see the attached screenshot.
